#29: 🧠The Battle for Generative AI Has Begun⚔️

A Glimpse Into The Future Of Your Personalized AI

Every company in the world will have an AI model customized for their business.

It is inevitable.

But AI is itself trained on data. Where does that data come from? How can it be customized?

Artificial intelligence can take in massive amounts of data and provide insights on the data almost instantaneously (this is what has caused the latest AI hype with LLMs such as ChatGPT).

Data improves the quality, efficiency, and impact of business operations.

How do we know this data is accurate, unbiased, and safe to use from an intellectual property standpoint?

The short answer is, we often don't. AI models are created with training data that is often not made public. Even if it is made public, the sheer volume of data used to train these models is staggeringly large and can be very difficult to comb through.

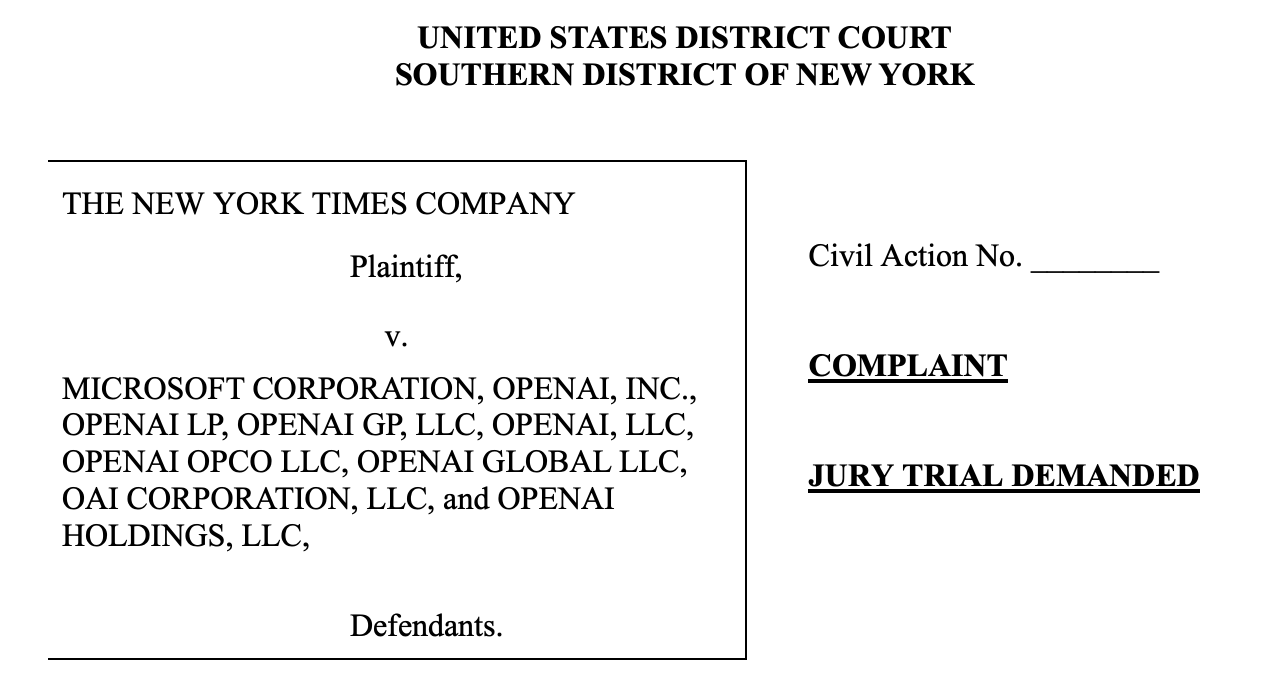

In the interest of transparency, some companies publish their training data. Last week, the New York Times sued OpenAI for copyright infringement based, in part, on this published information.

The NYT database is the largest proprietary database used to train GPT-3, the model family ChatGPT was built on. In other words, the original works created and owned by the NYT were used to train GPT-3.

This case will likely have a huge impact on the future of generative AI (how we treat the creative process, publishing information online, and rules AI must follow in the future).

As an intellectual property lawyer and patent agent, I care very much how the creative and intellectual works of others are treated by AI. I care about the protection of ideas and innovation.

That said, without getting into the copyright and legal issues, there are ways we can begin preparing for an AI world that are independent of how this case turns out.

Whether you are a creator, knowledge worker, or just someone hoping to use AI to make your life easier, there are steps you can begin taking now that will help future-proof your ideas, regardless of what happens in the battle for generative AI.

Let's dive in ✨

Recalibrating Recap

Welcome to Recalibrating! My name is Callum (@_wanderloots)

Join me each week as I learn to better life in every way possible, reflecting and recalibrating along the way to keep from getting too lost as I build my new world.

Thanks for sharing the journey with me ✨

Last week, I created a checkpoint, a map of Recalibrating. This checkpoint includes a brief summary of each entry published in 2023, a resource index if you will.

Over the next few weeks, I am going to play around with the structure of Recalibrating. Having launched YouTube, audio entries, and the Worldbuilding practical portion of Recalibrating, I am experimenting with new ways to balance my time while still sharing the most valuable information with you (see a few new sections at the end of this entry).

2023 was a year of exploring what it means to be human through evolutionary psychology using Maslow's Hierarchy as a base model to craft a sustainable creation system. 2024 is going to be a year of practical building for a new world that is innovating faster than ever before.

Thank you so much for your support over the last 6 months. It means the world to me 😊

If you have any suggestions for formatting this newsletter or topics that you found particularly helpful, please let me know. Your feedback is more helpful than you know 🫡

Now, let's take a look at the most historic case against generative AI thus far and how you can begin preparing for an AI world regardless of what happens in the battle between tech giants.

Let the future-proofing begin 🧭 🕰️

The Bigger Picture (why you should care)

The tech giants will continue battling to have the "best" AI foundation model (whatever that ends up meaning in the future). AI models may or may not continue infringing copyright. These issues are still up in the air.

For the average person, these issues are irrelevant. Does it matter to you whether Google, Microsoft, OpenAI, Amazon, or Meta wins the battle for "best" AI model?

Likely not.

(though Meta's data policies in the past still greatly concern me and my faith in the democratic process)

What will matter to you is generating data in the first place (your own proprietary data - intellectual property) that can be used to fine-tune (customize) your own AI assistant. Most people are not thinking of this, let alone building it.

Perhaps equally important, the structure of this personal data system will matter so that you can future-proof it. Imagine OpenAI goes bankrupt and you've only built a dataset that works with their model. That would be like trying to use an iPhone Lightning cable with an Android device.

We want to construct a universal dataset so that, whoever wins the battle for generative AI, you can unplug from old models and plug into the new "winning" model. Like using USB-C for everything.
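To make the USB-C idea a little more concrete, here is a minimal sketch of what a "universal" dataset could look like, assuming your notes can be reduced to simple prompt/response pairs. The `Note` fields, the adapter names, and the sample record are all hypothetical and only for illustration. The idea is to keep one neutral copy of your data as JSON Lines and write a small adapter for each model you might plug into, such as a chat-style layout (a list of role/content messages) or plain prompt/completion pairs.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class Note:
    """One model-neutral record. Field names are hypothetical."""
    prompt: str    # a question or task, in my own words
    response: str  # my own answer
    source: str    # where the note came from, e.g. "newsletter"


def to_neutral_jsonl(notes):
    """Serialize notes to plain JSON Lines, independent of any vendor."""
    return "\n".join(json.dumps(asdict(n), ensure_ascii=False) for n in notes)


def to_chat_messages(note):
    """Adapter: chat-style layout (a list of role/content messages)."""
    return {
        "messages": [
            {"role": "user", "content": note.prompt},
            {"role": "assistant", "content": note.response},
        ]
    }


def to_prompt_completion(note):
    """Adapter: plain prompt/completion pairs."""
    return {"prompt": note.prompt, "completion": note.response}


def export(notes, adapter):
    """Convert every note with the chosen adapter, one JSON object per line."""
    return "\n".join(json.dumps(adapter(n), ensure_ascii=False) for n in notes)


# Placeholder data, not real content.
notes = [Note("What is Recalibrating?", "Placeholder answer written by me.", "newsletter")]

print(to_neutral_jsonl(notes))
print(export(notes, to_chat_messages))
print(export(notes, to_prompt_completion))
```

If a provider disappears or changes its format, only the adapter needs rewriting. The neutral file, which is the part that holds your ideas, stays untouched.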

But before we get into these issues, let's take a look at the current state of AI with one of the best cases of proprietary data vs generative AI thus far.

New York Times vs OpenAI

The issue here is in the foundation model GPT-3 by OpenAI. The foundation model is what is trained on much of the Internet, with or without the consent of the copyright owners, to produce the LLM (large language model) that we interface with as part of ChatGPT.

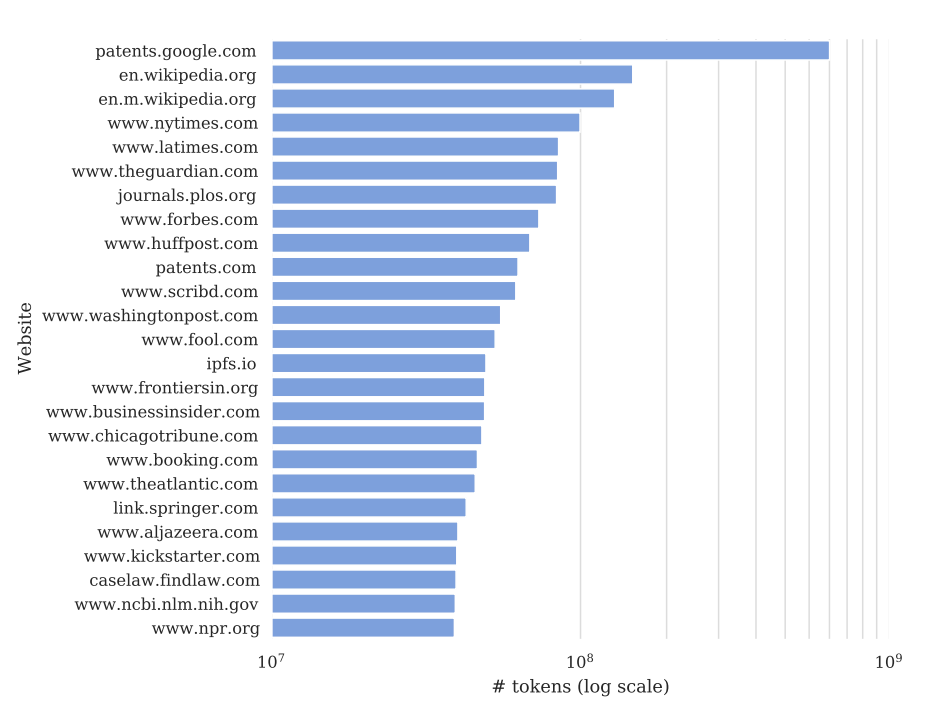

The NYT is the largest set of proprietary data used to train GPT-3 ⬇️

Proprietary data is essentially "data that has restricted rights so that the ability to distribute the data is limited by the owner". In other words, if I have proprietary data, you can't take it or use it without my permission.

As you can see in the chart, many other newspapers were also scraped for training data. Interestingly, IPFS (the InterPlanetary File System) was also scraped. IPFS is a decentralized, peer-to-peer storage system, also known as the "perma-web" and often used alongside blockchains. More on that later.

The main issue here is whether AI companies are allowed to use copyrighted or proprietary data to train their AI. This proprietary data will include online data produced by companies, but also online data in the form of images, music, video, etc. that individual creators have uploaded.

This case is quite literally dealing with the future of the Internet and AI. This case is also why I have exclusively used Adobe Firefly for my image generation, since it was trained on copyright-free or licensed images.

Can tech giants take whatever they want to train AI? Do they play by different rules than the rest of us? Is it in the public good to stifle innovation to protect individual ownership?

While we don't know the answers to these questions yet, we do know that the outcome of this case will have a large impact on the innovation of generative AI moving forward.

Data is clearly one of the most valuable resources in the world (look at how quickly OpenAI rose to the top as a tech giant).

The current case is dealing with information that is scraped from websites (publicly available). What about data that is not published online?

How can you future-proof your own data generation for an increasingly AI-driven world?

I could dive deeper into the IP aspects of what is going on here, but for now, I am going to paint a bigger picture of why this situation matters in the first place.

✨ If you are interested in me writing another article on the legal/IP issues, let me know!

Battle of the Tech Giants (Not You)

For most people, it doesn't matter which AI company wins the generative AI battle.

Most people don't generate data valuable to themselves. Instead, they generate Big Data by signing away their privacy in order to use apps (Google Maps, Instagram, Facebook, almost all social media, etc.).

Big Data is something you give away freely, so you can't complain when it is being used for training AI models (unless there is something in the terms of use that prevents it).

When the product is free, you are the product.

However, Big Data is not the only data being generated. At your job, you generate data by producing emails, launching campaigns, conversing with clients, selling products, etc. This data forms part of a proprietary data set that your company will almost certainly use to train or fine-tune an AI model in the future.

But what about your own AI system, independent of your company?

In the future, each of us will have a personalized AI system. How do you want it to be trained? Which AI model do you want it to be compatible with?

There are ways we can future-proof ourselves in the battle of generative AI.

We do not have to play the games of the tech giants. As individuals and small businesses, the specific foundation model we use in the future is not going to have a large impact on the quality of our AI systems IF we plan accordingly.

What will have a large impact is our ability to structure our data so that it is model-agnostic (cross-compatible). I want to be able to unplug my data from an AI model if it is deemed commercially unsafe, immoral, or biased and plug it into another model that meets my criteria.

How can this be done?

✨ The battles of the tech giants and newspaper empires will have no impact on you as an individual if *you are not generating any valuable data in the first place*

Note: the practical building components of this system (my second brain, digital mind) are what I am discussing with my paid subscribers in Worldbuilding. If you are interested in learning more about how I am building my proprietary data system, please consider upgrading your subscription to paid.

Training Your Own AI Safely

The issue with NYT vs OpenAI is that OpenAI has used copyrighted data to train their GPT model. As a company that is trying to take on Google, that makes sense. They needed all of the data they could get in order to catch up to the massive data systems Google has been building for 25 years. Whether that is legal or not may take a while to decide.

If OpenAI loses, they will likely have to pay a lot of money to the NYT and I'm sure other newspaper lawsuits will follow. They may even need to retrain parts of their GPT model or start over depending on what the courts decide.

But for you, an individual or small company, these consequences are of little import.

What would be of consequence is if YOU started training a model on copyrighted material and were sued by the NYT. Would you be able to afford it? (I know I wouldn't)

The goal here is to understand (a little) what it means to train an AI system safely and how you can get started today to plan for your future.

My Vision (Future-Proofing Myself)

In the future, we will all have a personalized AI. A copilot. An assistant. A manager, social media creator, marketer, advisor, writer, etc.

Whichever wording resonates most strongly with you, start thinking of your future AI system like that.

Imagine Siri or Alexa, but actually doing what you want (instead of you yelling at your phone or smart speaker and getting basic or useless information back). This AI copilot is not going to be a search engine that can hear your voice and run a Google search for you. It is actually going to do what you want it to.

Think more like Jarvis in Iron Man than Siri. Jarvis is an AI system that runs the inside of Iron Man's suit to answer questions, run diagnostics, do research, run the machinery of the suit, etc.

The key here is that Jarvis is used to solve Iron Man's problems as they arise (or even beforehand, predicting his needs).

Imagine that, as you think of problems, you can speak them out loud, and the answers would be given to you by your AI assistant. These answers can be tied to your emails, writing, videos, photography, or any other form (multi-modal AI systems). Tied to your existing data and able to access the Internet.

The output of these answers will automatically be sorted into their proper filing/project management systems so that the AI system recursively learns and you know where to find the information in the future. For example, when discussing your plans for next week, the AI will automatically structure your weekly calendar, send emails, build a to-do list, etc.

I could go on and on about my predictions for these AI systems and how I envision them (and I will, later), but for now let's stick to more concrete reality.

Apple's Vision Pro (Spatial Computer)

While not yet released, I imagine that Apple's Vision Pro will be something along these lines. Maybe not in its first iteration, but in the next few years. TBD.

If you have not yet watched the Vision Pro Keynote, I highly recommend you do. It will help you understand the future of computing much better, much faster.

The Vision Pro is like a Mac for your face.

As Apple puts it, it's the first product that "you look through instead of at". A spatial computer, one that operates in 3D without a screen.

The Vision Pro is both augmented reality and virtual reality, with the ability to toggle between the two realities. You can go from floating in outer space to sitting on your couch watching a TV the size of your house by turning a dial.

The Vision Pro is a goggle-type device that can be controlled using your eyes, attention, and fingers (no controller needed). When you are about to click on something with a mouse, your pupils dilate in expectation of the result of the click. The Vision Pro analyzes your eye motion to detect this dilation, which means you can literally click on things by thinking about clicking on them.

Pretty mind blowing 🤯 (and also a bit scary). How good are you at controlling your own attention 👀 maybe you should start taking mindfulness more seriously.

However, I believe that the true power of the Vision Pro (or similar VR/AR systems) will come when paired with an AI assistant. Think back to Iron Man putting on his helmet and being able to converse with Jarvis.

We evolved to use our voice to convey messages of importance while we walked around the world. Humans were not meant to sit hunched over at a computer, interfacing with a machine using a keyboard and mouse. This is literally causing de-evolution, according to the book Breath.

AI will help usher in this modern natural way of interfacing with machines. We will be able to look at things, have our AI track our eyes and know what we want to click on, and use our voice to tell our AI assistant what we want it to do.

Having had this prediction for a while, it came as no surprise to me that Apple recently announced their open-source LLM: Ferret. More on local LLMs and computing later.

With the Vision Pro being released later this month in the US, I think Apple has begun setting the stage for their new superpowered AI spatial computing system.

Augmented spatial computing.

The future ✨

Training Data Tiers

In the future, we will all have personalized AI. I want my AI to be just that: personalized.

To personalize my AI, I need to create data that is unique to me. Perhaps this data is copyrighted (copyright arises automatically when an original work is created, though registration can be helpful). At the least, I can make the data proprietary and prevent the tech giants from taking it (if I know what I am doing).

This personalized data can be used to create a unique dataset that can fine-tune the AI model of whichever tech giant wins the battle for generative AI. By creating a custom dataset, even if the AI company goes bankrupt, I will have the core, the essence of my ideas, which can be used to retrain a new model in the future (depending on how you build your second brain, but more on that later).

Let's leave the creation of the AI foundation models to those with the computing power and knowledge to do it. Individuals and small businesses don't have the resources to build these models, and that's okay.

Instead, focus on what you can control. Only you can build a dataset that will enable your AI assistant to help you like you want it to (especially when plugging it into your spatial computer).

To help you visualize what I mean by data, let's consider three different training data tiers:

Public (my newsletter/blog, Instagram, Pinterest, YouTube, podcast, etc.)

Semi-Private (paywalled content for my paid subscribers)

Private (my second brain, digital mind, personal dataset made of my notes, audio, video, and photos).
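As a rough illustration of the three tiers above, here is a minimal sketch that tags each piece of content with a tier and filters what is allowed to leave your system. The `Tier` and `Item` names and the sample titles are hypothetical, and a real setup would need far more care around access control. The ordering assumption is simply that Public is the least restricted tier and Private the most.

```python
from dataclasses import dataclass
from enum import IntEnum


class Tier(IntEnum):
    """Higher number means more restricted (assumed ordering)."""
    PUBLIC = 1
    SEMI_PRIVATE = 2
    PRIVATE = 3


@dataclass
class Item:
    title: str
    tier: Tier


def visible_up_to(items, max_tier):
    """Return the items whose tier is at or below max_tier."""
    return [item for item in items if item.tier <= max_tier]


# Placeholder library with one item per tier.
library = [
    Item("Newsletter entry", Tier.PUBLIC),
    Item("Paywalled Worldbuilding post", Tier.SEMI_PRIVATE),
    Item("Second-brain note", Tier.PRIVATE),
]

# What could be shared with paid subscribers: public plus semi-private.
shareable = visible_up_to(library, Tier.SEMI_PRIVATE)
print([item.title for item in shareable])
```

Labeling data by tier at the moment you create it means you can later decide, tier by tier, what a given AI model is allowed to see.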

I'll explain more of these different tiers next week with the analogies of digital streams, campfires, gardens, and private second brains.

For now, I want you to consider that ChatGPT is currently trained on EVERYTHING (almost). That means its answers to your prompts are rooted in generalized knowledge.

If you can begin crafting a system with customized knowledge, you are well on your way to generating a dataset that will personalize your future AI, augmenting your reality.

Are you ready?

Next Week

I hope this entry provides an overview of data systems and the legal issues generative AI is currently facing.

There are so many rabbit holes I could go down to explain these different concepts, and I know I merely scratched the surface.

If you are interested in a specific topic I mentioned in this entry, please let me know (by commenting on this post or replying by email). Your feedback truly helps me more than you know.

Next week, I'll explain more about the different data tiers and how you can better wrap your head around the future of computing and AI.

Stay tuned ✨

P.S. If you are interested in learning how I build my digital mind (second brain) to help me process information and identify patterns to solve my problems, please consider upgrading your subscription to paid. Your support means more than you know 😌 ✨

Paid subscribers get full access to Worldbuilding, a practical counterpoint to the theories described in Recalibrating. You also get access to a private chat and bonus explanations exclusive to paying members 👀

If you are not interested in a paid subscription but would like to show your support, please consider buying me a coffee to help keep my energy levels up as I write more ☕️ 📝

Book I'm Reading This Week

This year, I have a goal to read 52 books. One of my dreams in life has been to be able to read for a living, and I'm trying to make that happen by reading books and providing commentary on my YouTube channel.

This week's book is Feel-Good Productivity by Ali Abdaal.

Since burning out at my job, I have been a bit afraid of getting into the habit of over-productivity again. I am really enjoying Ali's perspective on how to make productivity enjoyable to reduce stress and increase output.

FYI: when I went to get a link for this book to share with you, I noticed that it is currently 31% off on Amazon.
