News This Week: OpenAI Claims AI Training Is Impossible Without Using Copyrighted Data

The world is changing.

Even our understanding of "theft" is becoming more nuanced.

Pre-Internet, it was much easier to determine if someone had stolen your copyrighted work. You published a book, painting, newsletter, or article, and it was attributed to you. If portions of that work appeared in public elsewhere, you knew exactly where to find the original, and you knew whom to take to court.

Post-Internet, copying has become murky. Artists, writers, and knowledge workers (creators as a whole) have their work stolen constantly, often with no recourse. The copiers are global, which makes dealing with the varying laws of different countries a jurisdictional nightmare.

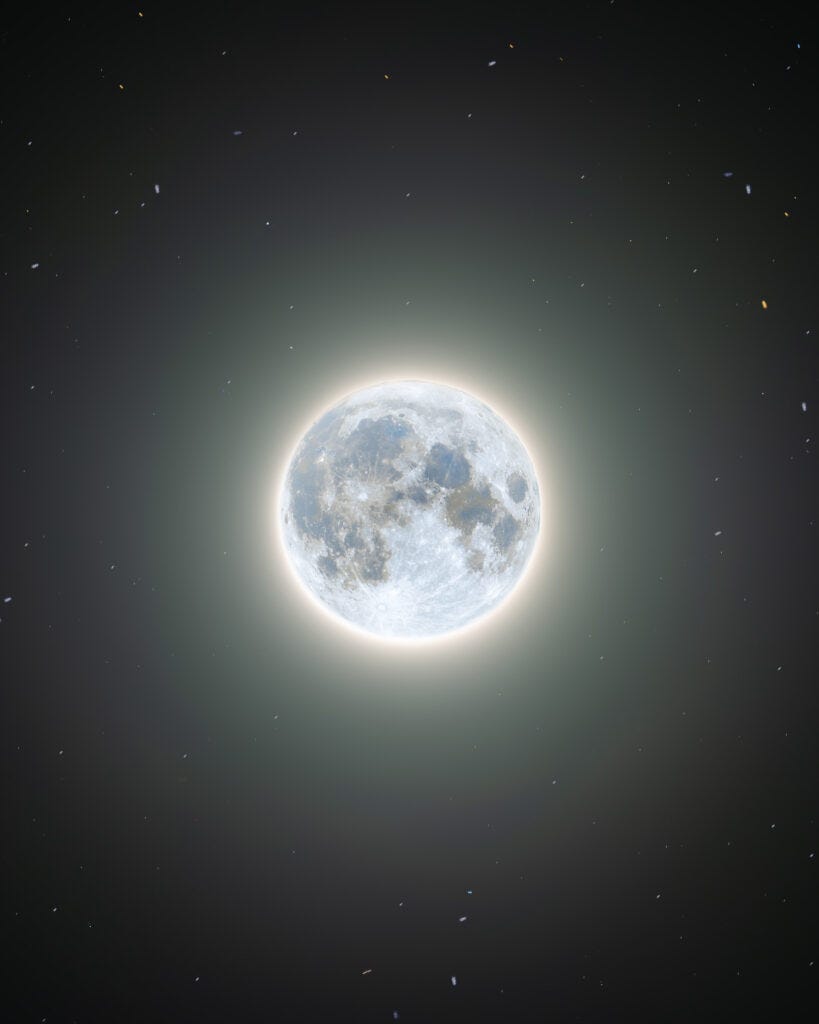

For example, here's a post I wrote about my lunar eclipse photo, which was stolen and reposted on Twitter, where it received more than 40,000 likes and thousands of shares. The copier gained hundreds of followers from that photo; I gained 2.

Sure, you can file a DMCA (Digital Millennium Copyright Act) takedown request, but that may take a while, and there may be no financial compensation for the theft (who is "moon.patrol"? 🤷‍♂️).

The alternative is to sue the copier, but copiers are often anonymous aggregator accounts, and finding the person behind one is very difficult (assuming you could afford the legal fees in the first place).

Post-AI, this problem is becoming almost impossible to solve. How do you find the original source of the information, let alone determine how much of it was taken, when your work was compiled with 100,000,000 other documents to train an AI model?

This week, OpenAI actually claims it is "impossible" to train AI without copyrighted materials.

Having read their argument, I am not sure that I agree, though I do see their point. It's complicated.

Interestingly, OpenAI has also released two new ChatGPT features that I believe are directly related to what I discussed last week regarding the future of personalized AI assistants. The issues are beginning to align.

Let's dive in ✨

P.S. There are a few other interesting tech developments this week, but I'll cover them in a separate section at the end so we can stick with the topic of copying today 🫡

Recalibrating Recap

Welcome to Recalibrating! My name is Callum (@_wanderloots)

Join me each week as I learn to better my life in every way possible, reflecting and recalibrating along the way to keep from getting too lost as I build my new world 🌎

Thanks for sharing the journey with me ✨

Last week, we touched on the battle for generative AI, with the New York Times suing OpenAI. I explained my vision for the future and how we can start preparing for the personalized AI assistants coming in the near future. To help visualize this future, I shared my predictions for spatial computing and why I think the next year is going to be one we never forget.

This week, we are going to continue by discussing data, artificial intelligence, and what it means to train AI with or without copyright. I will outline the context for why you should care about the different ways your information is being used, including some practical suggestions on how you can begin preparing yourself for an AI world that is within your control ✨

Also, I want to share that I've posted my third YouTube video and it has been performing better than any other video I've ever made! It seems I am not alone in looking to recover from burnout and build a better world for myself 😊

If you watched the video, please consider leaving a comment on YouTube or sharing the video with someone else you think could benefit 🫡

The Bigger Picture: "Impossible" AI Model Training

Our understanding of value is shifting.

For much of human history, theft was theft. Taking someone's creation (creative or knowledge-based) and using it without their permission made you liable for legal consequences (destroying the copy, compensating the original author, paying a fine, etc.).

But how do we treat AI training models that are created from millions of authored works?

How do we treat AI models that output a generative creation that does not copy your original authored work per se, but uses a small portion of it? Think of an echo of the original that has been mixed with the echoes of thousands of other creators to create something "new".

These models have been created by scraping almost the entire Internet, much of the publicly available data in the world.

If you wish to retain the value of your own creations (whether for personal use or within your company), you must begin considering the consequences of sharing your work in public.

If OpenAI has its way, everything you put online has the potential to be used to train AI models (creators can opt out of having their websites used to train AI if they wish, more on that below).

But, perhaps even more concerning, if corporations have their way, I expect they will begin creating AI employees based on the training data that you are giving them as part of your job.

AI has the potential to make many people's jobs obsolete. This fear is what is driving "AI-nxiety", and it was a major factor in the 2023 writers' and actors' strikes.

So what does this all mean?

It means that we each need to start paying more attention to the way we consume, create, and generate value in our society.

We need to be more mindful of our actions and the way we share information.

We Are All To Blame

The development of the Internet is a fascinating topic, one of the most interesting psychological experiments in human history. Everything we put online stays there (for the most part), which means we are generating more and more data every year. The rate at which we generate data is also increasing exponentially.

Unfortunately, there has been a social shift of value with the current Internet system (referred to as web2; for more details on this topic, I have an Internet and web3 FAQ here).

With the development of social media, consumers have become accustomed to everything online being free. I blame Facebook and Instagram for much of this perception, though of course they do have their benefits and seem to be changing.

When you want an app but have to pay for it, you likely do not purchase the app. There is almost always a free option (even if it is not quite as good). You have become accustomed to almost everything online being free.

As a society, we have been trained to expect free content 100% of the time.

Unfortunately, this is greatly damaging for our modern understanding of "value".

Part of this devaluation comes from web2 (social media) companies. They have trained us to think that we deserve free content (data, creations, knowledge), which has led to the devaluation of creation and the effort that goes into it. Creators have to put out "content" constantly in order to keep up with the demands of the algorithm and their audiences.

In a related vein, part of this devaluation comes from the lack of digital scarcity. Anyone can copy and paste one of my photos and share it with anyone else. They can take this image and print their own fine art prints and start selling them, albeit at lower quality. Odds are I will never know (but please don't do this 😠).

Both of these reasons (among others) have led to the point where AI companies can make the claim that they deserve publicly available content for free in the interests of humanity.

But do they?

OpenAI & "Impossible" AI Training

OpenAI claims that it is "impossible" to train AI models without using copyrighted materials.

Effectively, OpenAI is saying that, sure, we can train AI models without copyrighted materials, but the resultant AI is not going to be very effective. It would be mostly limited to materials in the public domain, which are old and not useful for pushing the bounds of modern technology.

"legal right is less important to us than being good citizens" - OpenAI

One of my frustrations with the legal industry and intellectual property is how slowly the law adapts to changing times. Many forms of intellectual property are unable to keep up with the pace of innovation in the digital age.

Trying to apply IP policies based on rules that were created in the 1400s (interestingly, right after the printing press was invented) does not serve a technology that is developing as rapidly as AI has over the last few years.

Do we want an AI that is 100 years old? Or do we want AI that is up-to-date with modern technology, science, culture, global opinion, and world issues?

I think the answer is obvious: of course we want AI to be the best it can be if it is to be of any use to us at all.

But does that mean it must come at the cost of the individuals who are having their life's work put into training these models with no compensation?

Like much of life, the answer is not so simple. These issues fall within the gradient of grey between the black and the white.

It's Not Impossible, Just Complicated

OpenAI claims it is impossible to avoid the use of copyrighted information when training their model, but there is another tech giant that has seemingly managed just fine: Adobe.

Adobe Firefly is image generation software, similar to OpenAI's DALL-E, that creates generative AI images from text prompts.

Here's an intro to Firefly video I created last year if you want to learn more:

Adobe Firefly has been trained on copyright-free (public domain) and licensed works, which, according to Adobe, makes Firefly commercially safe to use without fear of copyright infringement. Adobe also has a compensation system in place to pay creators who submit works to Adobe Stock for training purposes.

Adobe has also introduced Content Credentials, which label generated work as AI-made so that you know it is not a human-created image, and which provide "tamper-evident metadata".
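To make "tamper-evident metadata" a bit more concrete: the general idea is to bind a label (such as "AI-generated") to a cryptographic hash of the exact image bytes, then sign that bundle, so that editing either the image or the label breaks verification. The sketch below is a simplified illustration, not Adobe's implementation. Real Content Credentials follow the C2PA standard and use public-key certificate signatures; this example substitutes an HMAC with a hypothetical shared key (`SIGNING_KEY`) only because HMAC is available in Python's standard library.

```python
import hashlib
import hmac
import json

# Hypothetical signing key for illustration only. Real content credentials
# use public-key certificates rather than a shared secret like this.
SIGNING_KEY = b"demo-key-not-for-production"


def _sign(claim: dict) -> str:
    """HMAC-SHA256 over a canonical (sorted-key) JSON encoding of the claim."""
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(image_bytes: bytes, generator: str) -> dict:
    """Bind a generator label to the exact bytes of an image and sign it."""
    claim = {
        "generator": generator,
        "content_sha256": hashlib.sha256(image_bytes).hexdigest(),
    }
    return {"claim": claim, "signature": _sign(claim)}


def verify(image_bytes: bytes, manifest: dict) -> bool:
    """Return False if either the image or its metadata was altered after signing."""
    if not hmac.compare_digest(_sign(manifest["claim"]), manifest["signature"]):
        return False  # the label itself was edited
    actual = hashlib.sha256(image_bytes).hexdigest()
    return manifest["claim"]["content_sha256"] == actual  # the pixels were edited?


if __name__ == "__main__":
    image = b"placeholder image bytes"
    manifest = make_manifest(image, "example-ai-generator")
    print(verify(image, manifest))            # untouched image and label
    print(verify(image + b"edit", manifest))  # image changed after signing
```

The design choice worth noting is that the signature covers the hash of the content, not just the label, so you cannot move a "human-made" label onto a different file without verification failing.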

This training philosophy is why I have been using Firefly exclusively to generate the images you have seen in this newsletter.

💡 If Adobe can manage to avoid copyright issues, why does OpenAI claim it is impossible?

Well, the difference lies, I believe, in the type of generated output. Firefly deals with images, while OpenAI deals with both images (DALL-E) and text (ChatGPT). It is multimodal.

While of course images have certain trends, styles, and quality developments over the years, images are often much less time-sensitive than text.

When using ChatGPT to answer your questions, you likely want the most up-to-date answer possible (otherwise you would just go back to the old way and Google it).

If OpenAI is unable to use recent information to train its models, the usefulness of its generated text declines the longer it takes new information to be included in the model.

The AI model becomes out-of-date very quickly, which defeats the whole point of having cutting-edge AI in the first place (a main point of OpenAI's argument against the New York Times).

Opt In vs Opt Out Methods of AI Training

To help address the issue of up-to-date information being used to train their AI models, OpenAI offers opt-out functionality. Companies and creators can choose to have their websites excluded from OpenAI's web scraping when gathering information to update their model.
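On the website side, this opt-out is usually expressed in a site's robots.txt file, and OpenAI documents a crawler user agent named GPTBot that site owners can disallow. The sketch below is a hypothetical example, not a file published by OpenAI or Substack: it uses Python's standard-library robots.txt parser to confirm that a sample file blocks GPTBot while leaving other crawlers alone. The domain, page path, and "SomeSearchBot" agent are placeholders, and robots.txt is a voluntary convention that only works if the crawler chooses to honour it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block OpenAI's GPTBot crawler from the whole site,
# while leaving the site open to every other user agent.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def can_crawl(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse in-memory lines, no network
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/posts/lunar-eclipse"
    print("GPTBot allowed:", can_crawl("GPTBot", page))
    print("Other crawler allowed:", can_crawl("SomeSearchBot", page))
```

This also illustrates why the opt-out model puts the burden on creators: nothing is blocked until someone knows to write those two lines.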

Substack, for example, has an option to flag content as not available for training AI models and to prevent search engines from indexing paywalled content (though this may reduce your publication's visibility down the road).

The opt-out option enables creators and companies to choose whether they wish to contribute their works to the training of modern AI systems like ChatGPT and Google Bard.

While this does not solve the "theft" of data used to create the original models over the last 5 years, this option does enable choice moving forwards.

However, did you know that this was an option? Probably not; most people don't.

An opt-out policy requires awareness of both the problem and the solution, and almost no one has either.

While I appreciate what OpenAI and Google are doing by giving creators a choice, they are making theft the default, rather than making non-theft the default.

The onus (responsibility) should not be on creators to opt-out of having their work taken by companies for training AI models. The default should be flipped to an opt-in model rather than an opt-out model.

An opt-in model would ensure that NO websites are scraped without their permission, letting creators and companies choose whether they wish to contribute to the furtherance of AI innovation.

That said, I am trying to monetize and build a living off of these online creations. Why would I give it to AI models for free?

Doesn't it make more sense to establish a compensation model that incentivizes people to contribute their hard-won creations to the training of tech giants' AI models?

Pay Contributors and Track Usage

As I mentioned earlier, one of the issues with the way we currently use the Internet is that there is no scarcity with digital creations. Anyone can right-click-save, copy-paste, or screenshot an image, piece of writing, or video. 🫰 The digital work can be duplicated without the creator's permission.

If only there were a way to demonstrate who had the original digital work so that we knew if there was a copied version...

If only there were a way to create a certificate of authenticity for a digital work, stamping it with the original creator's seal of approval...

If this way existed, companies like OpenAI would be able to trace the original work across the Internet so that they knew who to compensate for contributing to the training of their AI model.

OpenAI would be able to share the profits of its AI models with the people who contributed to their training.

This way exists. It's called blockchain.

While I know blockchain is a complicated topic, consider that blockchain is NOT complicated for machines to read.

Blockchain was made for AI, and AI was made for humans.

Blockchain 🤝 AI 🤝 Humans

It is a natural solution to our internet problems.

But how does it work?

A (Very) Brief Intro to Blockchain

I'll go into more depth on blockchain later (I have my certificate in blockchain law and do blockchain consulting, so there are a lot of avenues to discuss).

For now, you can think of blockchain as a way to introduce digital scarcity (a MUCH bigger deal than most people realize). This was never possible before.

Think of the Mona Lisa. Why is it so valuable? Well, because there is only one, and it resides in the Louvre. All other copies in the world are derivative (based on the original), but are not the original itself. These copies sell for $20 instead of … 🤷‍♂️ priceless?

The reason the Mona Lisa is so valuable is that it is the only one and has been verified to be the original painted by Leonardo da Vinci (though the verification of traditional art involves a lot of trust... that's an issue for another time).

With digital works, the default is that there is no way to trace originality. Blockchain solves this problem.

Blockchain adds a signature to the digital work to say that this is 0001, the original creation. If someone copies it and mints another one, the copy has a different signature and a different number, and the record shows which one came first.

Without the creator's private key, there is no practical way to fake the signature.
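The ideas of a fingerprint, a time-ordered record, and tamper evidence can be shown with a toy, single-machine ledger. This is a hypothetical illustration, not how any real blockchain or smart contract is implemented: there is no network, no consensus, and no wallet signature here, only SHA-256 fingerprints and hash links between records. Note that an exact byte-for-byte copy produces the same fingerprint, so this ledger points back to the first registration, and editing any earlier record is detected when the chain is re-checked.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the very first record


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def record_hash(body: dict) -> str:
    """Hash a record's fields in a canonical (sorted-key) JSON form."""
    return sha256_hex(json.dumps(body, sort_keys=True).encode())


@dataclass
class ToyLedger:
    """An append-only, hash-linked list of ownership records (illustrative only)."""
    blocks: list = field(default_factory=list)

    def register(self, content: bytes, creator: str) -> dict:
        """Record content's fingerprint; if already recorded, return the first claim."""
        fingerprint = sha256_hex(content)
        for block in self.blocks:
            if block["content_hash"] == fingerprint:
                return block  # earliest registration wins: this is the "original"
        body = {
            "number": len(self.blocks) + 1,  # 1 plays the role of "0001"
            "content_hash": fingerprint,
            "creator": creator,
            "prev_hash": self.blocks[-1]["block_hash"] if self.blocks else GENESIS,
        }
        block = dict(body, block_hash=record_hash(body))
        self.blocks.append(block)
        return block

    def is_intact(self) -> bool:
        """Recompute every hash and link; any edited record makes this False."""
        prev_hash = GENESIS
        for block in self.blocks:
            body = {k: v for k, v in block.items() if k != "block_hash"}
            if body["prev_hash"] != prev_hash or record_hash(body) != block["block_hash"]:
                return False
            prev_hash = block["block_hash"]
        return True


if __name__ == "__main__":
    ledger = ToyLedger()
    photo = b"lunar eclipse photo bytes"
    ledger.register(photo, "original_creator")
    claim = ledger.register(photo, "anonymous_copier")  # a later copier tries to claim it
    print(claim["creator"], claim["number"])
    print("chain intact:", ledger.is_intact())
```

A real chain adds what this toy leaves out: many independent nodes agreeing on the order of records, and public-key signatures proving which wallet made each claim.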

AI will be able to use these signatures to determine who the digital creator was, while also providing an economic system to transfer funds to the creator for using the work in the training of the AI model.
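How contributions to an AI model should be measured is still an open question, but once some usage figure exists per creator, the payout itself is simple arithmetic. The sketch below assumes a hypothetical revenue pool and hypothetical per-creator usage counts (both purely illustrative) and splits the pool proportionally in integer cents, using the largest-remainder method so that the payouts always add up to the full pool.

```python
def split_payout(pool_cents: int, usage: dict[str, int]) -> dict[str, int]:
    """Split pool_cents among creators in proportion to their usage counts.

    Works in integer cents (no floating-point rounding drift) and hands out
    leftover cents by the largest-remainder method, so the result always
    sums to exactly pool_cents.
    """
    total = sum(usage.values())
    if total == 0:
        return {creator: 0 for creator in usage}
    # Whole-cent share for each creator, rounded down.
    shares = {c: pool_cents * n // total for c, n in usage.items()}
    # The fractional part each creator lost to rounding (scaled by total).
    remainders = {c: pool_cents * n % total for c, n in usage.items()}
    leftover = pool_cents - sum(shares.values())
    # Give one extra cent each to the creators with the largest remainders.
    for c in sorted(remainders, key=remainders.get, reverse=True)[:leftover]:
        shares[c] += 1
    return shares


if __name__ == "__main__":
    # Hypothetical: $10.00 of revenue, three creators with usage counts 3, 2, 2.
    print(split_payout(1000, {"creator_a": 3, "creator_b": 2, "creator_c": 2}))
    # prints {'creator_a': 428, 'creator_b': 286, 'creator_c': 286}
```

Integer cents are used deliberately: splitting $10.00 three ways in floating point can leave fractions of a cent unaccounted for, whereas here every cent of the pool ends up with a creator.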

If you are interested in learning more, I explained the concepts of digital scarcity, NFTs, and mental health in this article on Digital Collectibles.

Curate Your Data Sources More Carefully

Now let's start thinking a bit more practically about the data you are creating and how you can retain control of its use. I originally planned for this section to be the sole topic this week, but realized I needed to provide more context on the AI issues first.

I'll get deeper into these systems next week and as a part of Worldbuilding (paywalled content, which is another way I can keep my writing away from AI models, they would have to pay to see it 👀).

In my opinion, there are only a few options for dealing with unauthorized use of information online:

never post your work in public, ever (not really possible if you're trying to build a personal brand or company);

put your work behind a paywall (possible, but goes against the philosophy of a free Internet, should be used strategically);

be more selective about what you post online (keep some of it offline until you are ready to monetize);

mint your work onto a blockchain with your own smart contract (I will explain this more later, but it creates a permanent time-stamp for your works);

learn not to care about digital theft.

I have a YouTube series on how to mint your own smart contract if you are interested in seeing how it is done:

My Data System Solution

My solution is a mix of the above (except not posting, that's not an option for me). The way that I think of the different forms of posting/not posting is through the concepts of a digital stream, digital garden, digital campfire, and digital mind (second brain).

Each of these is a part of the system I am building to intentionally craft my data structures so that there are different tiers of availability to the public (including to AI models).

My solution to the public AI training model problem is not one-size-fits-all. It is a blend of different walls for your data so that only the information you want to get out can get out.

The rest stays within your digital mind (second brain), your private proprietary dataset that is used to train your own personal assistant to retain your unique perspective (or your company's, depending on what you are building).

Incidentally, OpenAI clearly understands this, judging by its latest ChatGPT update: memory & learning:

If you want to stay ahead of the masses, you do not want to use the same personalized assistant that every other person will use. You won't be able to stand out that way. You will want to learn how to structure your own assistant that understands what you want from it.

There is a reason I have been writing about learning being the most valuable skill you can ever learn.

It is the skill that lets you learn all other skills.

Your unique learning style and expression of what you learn is going to be your most valuable resource in the future.

You will use this style and expression to teach your personal AI assistant to operate in a way found nowhere else on the Internet, so that you can retain your unique value amidst our rapidly changing world.

The time is now to begin future-proofing yourself for an AI world.

It is already here.

Next week

Many of these topics require an article the length of a book to explain their nuances. I hope that you find my brief overview helpful.

I am working on a YouTube series that will go deeper into these topics to provide complementary context for Recalibrating.

Next week, I'll continue explaining the various forms of data creation and how you can begin preparing your own system for the personalized AI assistants that are coming.

Stay tuned ✨

P.S. If you are interested in learning how I build my digital mind (second brain) to help me process information and identify patterns to solve my problems, please consider upgrading your subscription to paid. Your support means more than you know 😌 ✨

Paid subscribers get full access to Worldbuilding, a practical counterpoint to the theories described in Recalibrating. You also get access to a private chat and bonus explanations exclusive to paying members 👀

If you are not interested in a paid subscription but would like to show your support, please consider buying me a coffee to help keep my energy levels up as I write more ☕️ 📝

Book I'm (Still) Reading This Week:

Feel-Good Productivity by Ali Abdaal

I am on the final section of this book, which discusses the three types of burnout and how to structure a sustainable system. This is a topic I have been interested in since I began this newsletter and I'm greatly appreciating Ali's perspective.

FYI, when I went to get a link for this book to share with you, I noticed that it is currently 29% off on Amazon.

Photo of the week: Blue Supermoon (2023)

In Other News:

Apple Vision Pro release date announced (pre-orders open January 19, available February 2). U.S. only 😞

Sony Spatial Computer announced (directly challenging Apple, should be interesting to see how it compares).

GPT Store intro and announcement by OpenAI. You can now create your own custom ChatGPT and list it on OpenAI's "app store".
